Cache

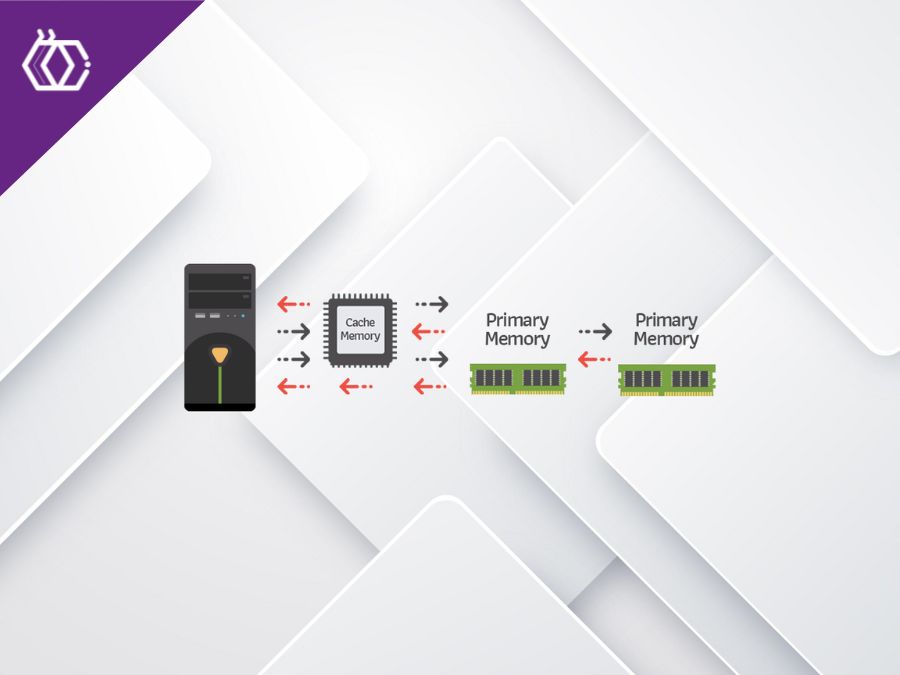

(6-minute read)

Performance optimization is a constant concern for software developers, and cache memory is one of its key elements. Understanding how cache memory works and how to use it efficiently can have a significant impact on the speed of a program. Let's explore this crucial concept for software development.

WHAT IS CACHE MEMORY?

Cache memory is a small, fast memory that sits between the central processing unit (CPU) and the computer's main memory (RAM). Its main purpose is to hold data temporarily so that the CPU can reach it in less time.

When a program runs, it continually accesses data in RAM. Cache memory acts as an additional layer of storage that holds copies of the data used most often or expected to be needed soon. This spares the CPU from constantly fetching data from main memory, which is slower than the cache.

Cache memory is organized into levels, generally referred to as L1, L2, and L3, each with a different proximity to the CPU and a different storage capacity. Because frequently accessed data can be retrieved from the cache more quickly, overall system performance improves significantly.

The effectiveness of cache memory comes from exploiting two principles: temporal locality (recently used data is likely to be used again) and spatial locality (data located near recently used data is likely to be used next). These principles help minimize the impact of slower main memory access times.

TYPES OF CACHE MEMORY

There are generally three cache levels (L1, L2, and L3), each with a different size and proximity to the CPU. The closer a cache is to the CPU, the smaller it is and the faster it can be accessed. Each level is detailed below:

L1 Cache: The fastest and closest to the CPU, generally split into an instruction cache (code) and a data cache.

L2 Cache: Located between L1 and main memory, offering a balance between speed and capacity.

L3 Cache: Typically shared between CPU cores, it provides a larger reserve of data with a longer access time than L1 and L2.

ADVANTAGES OF USING CACHE MEMORY

Using cache memory has many advantages. Some of the main ones are listed below.

Speed of Access: Faster access to frequently used data, reducing latency.

Reduction of Traffic in Main Memory: Reduces the load on main memory, avoiding bottlenecks in the system.

Power Saving: Fast cache access can allow the CPU to enter low-power states more frequently.

TIPS FOR OPTIMIZATION

Access Patterns: Take advantage of temporal and spatial locality by accessing data that is close in time and space, to maximize the benefit of the cache.

Conflict Minimization: Avoid access patterns in which different data repeatedly competes for the same cache lines, since each conflict evicts data that may be needed again.

Appropriate Size: Choose a cache size that suits the application. A bigger cache doesn't always mean better performance.

Usage Profile: Analyze your application's data access pattern and adjust the caching strategy accordingly.

Cache memory plays a crucial role in optimizing system performance. Understanding how it works and applying efficient usage strategies can result in substantial improvements in the speed and efficiency of your applications. Be aware of your data access profile and adjust your caching approach as needed to get the most benefit.
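To make the locality and conflict tips more concrete, here is a minimal sketch of a cache simulator. It is an illustration under stated assumptions, not a model of any real CPU: it assumes a hypothetical direct-mapped cache with 64 lines of 64 bytes each, and the function name `count_misses`, the matrix dimensions, and the element size are all invented for this example. Real caches are usually set-associative and work together with hardware prefetchers, so actual numbers will differ.

```python
def count_misses(addresses, num_lines=64, line_size=64):
    """Simulate a direct-mapped cache and return the number of misses.

    num_lines and line_size are illustrative assumptions, not the
    parameters of any particular processor.
    """
    stored = [None] * num_lines        # memory block currently held by each cache line
    misses = 0
    for addr in addresses:
        block = addr // line_size      # memory block that contains this byte
        index = block % num_lines      # the single cache line this block may occupy
        if stored[index] != block:     # line is empty or holds another block: miss
            misses += 1
            stored[index] = block      # load the block, evicting the previous one
    return misses


# Hypothetical 256 x 256 matrix of 8-byte elements stored row by row.
ROWS, COLS, ELEM_SIZE = 256, 256, 8


def address(row, col):
    """Byte offset of element (row, col) in row-major storage."""
    return (row * COLS + col) * ELEM_SIZE


# Row-major traversal walks through memory sequentially (good spatial locality).
row_major = [address(r, c) for r in range(ROWS) for c in range(COLS)]

# Column-major traversal jumps a whole row (2048 bytes) between accesses.
col_major = [address(r, c) for c in range(COLS) for r in range(ROWS)]

row_misses = count_misses(row_major)
col_misses = count_misses(col_major)

print(f"accesses:            {len(row_major)}")
print(f"row-major misses:    {row_misses}")
print(f"column-major misses: {col_misses}")
```

Under these assumed parameters, the row-major traversal misses only once per 64-byte line (8,192 misses in 65,536 accesses), because the next seven elements are already in the cache when they are needed. The column-major traversal misses on every one of its 65,536 accesses: the 2,048-byte stride makes consecutive accesses compete for the same two cache lines, so each block is evicted before its neighbouring elements are used. The data and the amount of work are identical in both cases; only the access order changes.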
